This year HPG took place in Anaheim on July 19th-21st, co-located with and running just prior to SIGGRAPH. The program is here.

Friday July 19

Advanced Rasterization

Moderator: Charles Loop, Microsoft Research

Theory and Analysis of Higher-Order Motion Blur Rasterization Site Slides

Carl Johan Gribel, Lund University; Jacob Munkberg, Intel Corporation; Jon Hasselgren, Intel Corporation; Tomas Akenine-Möller, Lund University/Intel Corporation

The conference started with a return to Intel’s work on higher-order rasterization. The presentation highlighted that motion is typically curved rather than linear and is therefore better represented by quadratics. The next part showed how to adapt the common types of traversal to handle this curved motion: the presenter demonstrated interval- and tile-based methods and how to extend them to quadratic motion. This section introduced Subdividable Linear Efficient Function Enclosures (SLEFEs), which I’d not heard of before. SLEFEs give tighter bounds on a function over an interval than the convex hull of control points that you’d typically use – definitely something to look at later.
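To see why control-point bounds can be loose for curved motion, here is a minimal sketch. It is not an implementation of SLEFEs; it only compares the convex-hull bound of a quadratic Bézier's control points with the exact bound over the time interval, for a single coordinate of a moving vertex. All function names are my own.

```python
def bezier2(p0, p1, p2, t):
    """Evaluate a quadratic Bezier (one coordinate of a vertex path) at time t."""
    s = 1.0 - t
    return s * s * p0 + 2.0 * s * t * p1 + t * t * p2


def control_point_bounds(p0, p1, p2):
    """Conservative bounds from the convex hull of the control points."""
    return min(p0, p1, p2), max(p0, p1, p2)


def exact_bounds(p0, p1, p2):
    """Exact bounds over t in [0, 1]: the endpoints plus any interior extremum."""
    candidates = [p0, p2]
    denom = p0 - 2.0 * p1 + p2      # second difference; zero means linear motion
    if denom != 0.0:
        t = (p0 - p1) / denom       # time at which the derivative vanishes
        if 0.0 < t < 1.0:
            candidates.append(bezier2(p0, p1, p2, t))
    return min(candidates), max(candidates)


# A vertex that moves out and back: the control point overshoots the real path.
hull = control_point_bounds(0.0, 2.0, 0.0)
tight = exact_bounds(0.0, 2.0, 0.0)
```

In this example the control-point bound is twice as wide as the real extent of the motion, which is the kind of slack a tighter enclosure is meant to remove.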

PixelPie: Maximal Poisson-disk Sampling with Rasterization Paper Slides (should be)

Cheuk Yiu Ip, University of Maryland, College Park; M. Adil Yalçın, University of Maryland, College Park; David Luebke, NVIDIA Research; Amitabh Varshney, University of Maryland, College Park

All Poisson-disk sampling talks start with a discussion of the basic dart-throwing, rejection-based implementation first put forward in 1986 before going into the details of their own implementation. The contribution of this talk was the idea of using rasterization to maintain the minimum distance requirement. This is handled by rendering disks which will occlude each other if overlapping, where overlapping means too close – simple but effective. Of course there are a couple of issues. Firstly, there’s some angular bias from the rasterization when the radius is small, because of how the disk’s edge maps to pixels. Secondly, even once you have a good set of initial points, there’s extra non-rasterization compute work to handle the remaining empty space via stream compaction. One extra feature you get cheaply is support for importance sampling, since you can change the size of each disk based on some additional input. This was shown by using the technique to select points that map to features on images – something I’d not seen before.
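For reference, the dart-throwing baseline that these talks open with can be sketched in a few lines. This is the naive CPU version, not PixelPie's rasterization approach, and it makes no attempt at maximal coverage; the function name and parameters are my own.

```python
import random


def dart_throwing(radius, attempts, seed=0):
    """Naive Poisson-disk sampling in the unit square by rejection."""
    rng = random.Random(seed)
    r2 = radius * radius
    points = []
    for _ in range(attempts):
        x, y = rng.random(), rng.random()
        # Keep the dart only if it is at least `radius` away from every kept point.
        if all((x - px) ** 2 + (y - py) ** 2 >= r2 for px, py in points):
            points.append((x, y))
    return points


samples = dart_throwing(radius=0.1, attempts=2000)
```

Every candidate is tested against every accepted point, which is the cost that the occluding-disk trick replaces with a depth test.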

Out-of-Core Construction of Sparse Voxel Octrees Paper Slides

Jeroen Baert, Department of Computer Science, KU Leuven; Ares Lagae, Department of Computer Science, KU Leuven; Philip Dutré, Department of Computer Science, KU Leuven

The fundamental contribution from this talk was the use of Morton ordering when partitioning the mesh to minimize the amount of local memory needed when voxelizing. One interesting side effect of this memory reduction is improved locality, resulting in faster voxelization. In the example cases, this meant that the tests with 128MB were quicker than those with 1GB or 4GB. The laid-back nature of the presenter and the instant results made it feel like you could go and implement it right now, but then the source was made available, taking the fun out of that idea!
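As a reminder of what Morton ordering means for voxels, here is a small sketch that interleaves the bits of a 3D cell coordinate into a single key. The bit layout (x in the lowest bit) and the function name are my own choices, not taken from the paper.

```python
def morton3(x, y, z, bits=10):
    """Interleave the low `bits` bits of x, y and z into one Morton (Z-order) code."""
    code = 0
    for i in range(bits):
        code |= ((x >> i) & 1) << (3 * i)        # x takes bits 0, 3, 6, ...
        code |= ((y >> i) & 1) << (3 * i + 1)    # y takes bits 1, 4, 7, ...
        code |= ((z >> i) & 1) << (3 * i + 2)    # z takes bits 2, 5, 8, ...
    return code


# Sorting cells by Morton code visits each 2x2x2 block completely before moving on.
cells = [(x, y, z) for z in range(4) for y in range(4) for x in range(4)]
ordered = sorted(cells, key=lambda c: morton3(*c))
```

Because the first eight cells in `ordered` are exactly one 2x2x2 block, an octree node can be completed and flushed as soon as its eight children have streamed past, which is where the memory saving comes from.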

Shadows

Moderator: Samuli Laine, NVIDIA Research

Screen-Space Far-Field Ambient Obscurance Slides Site including source Paper (Video)

Ville Timonen, Åbo Akademi University

The first thing to note is the difference between occlusion and obscurance: obscurance includes a falloff term such as a distance weight. The aim is a technique that can operate over greater distances. The talk highlighted the issues with previous techniques: direct sampling misses important values, while the alternative of mipmapping the average, minimum or maximum depth results in flattening, over-occlusion or under-occlusion. The contribution of this talk was to focus on the details important for AO based on scanning the depth map in multiple directions. This information is then converted into prefix sums to easily get the range of important height samples across a sector. The results of the technique were shown to be closer to ray traces of a depth buffer than the typical mipmap technique. One other thing I noticed was the use of a 10% guard band, which going by the numbers is applied on every edge: from 1280×720 (921600 pixels) to 1536×864 (1327104 pixels), a 44% increase in pixels! Another useful result was a comment from the presenter that it’s better to treat possibly occluding surfaces as a thin shell rather than a full volume, since the eye notices incorrect shadowing before incorrect lighting.
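The guard band arithmetic is worth spelling out, since a "10%" band costs far more than 10% of the pixels. This sketch assumes the band is added on every edge, which is what the quoted resolutions imply; the helper name is my own.

```python
def with_guard_band(width, height, percent_per_edge):
    """Pad a render target by `percent_per_edge` percent on each of its four edges."""
    scale = 100 + 2 * percent_per_edge      # two edges per axis
    return width * scale // 100, height * scale // 100


w, h = with_guard_band(1280, 720, 10)
extra_percent = (w * h * 100) // (1280 * 720) - 100
```

Each axis grows by a factor of 1.2, so the pixel count grows by 1.2 × 1.2 = 1.44.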

Imperfect Voxelized Shadow Volumes Paper

Chris Wyman, NVIDIA; Zeng Dai, University of Iowa

The aim of this paper was interactive performance or better when generating a shadow volume per virtual point light (VPL) on an area light. The initial naive method, one voxelized shadow volume per point light, ran at less than 1 FPS. The problem is how to handle many VPLs. The first part of the solution is imperfect shadow maps (ISMs), a technique for calculating and storing lots of small shadow maps generated from point splats within the scene with the gaps filled in (area lights are actually described as another application in the ISM paper). After creating an ISM, each shadow sub-map can be processed in parallel. The results looked good with a lot of maps and there’s the ability to balance the number of maps against their required size in the ISM. For example, a sharper point light could use the entire ISM space for a single map for sharpness, but a more diffuse light with many samples could pack more, smaller maps into the ISM.

Panel: High-Performance Graphics in Film

Moderator: Matt Pharr

Dreamworks, Eric Tabellion; Weta Digital, Luca Fascione; Disney Animation, David Adler / Rasmus Tamstorf; Solid Angle, Thiago Ize / Marcos Fajardo

Introductions:

Disney

Use OpenGL in some preview tools

Major GPU challenges are development and deployment

They are interested in the use of compute and are hiring a research scientist

PDI Dreamworks

OpenGL display pipeline for tools

Useful for early iterations

Also mentioned Amorphous – An OpenGL Sparse Volume Renderer

Weta

Highlighted that the production flow included a kickback loop where everything fed back to an earlier stage

Not seeing GPU as an option

Arnold

Long code life – can’t be updated to each new language/driver

Reuse of hardware too

Highlighted that 6GB GPUs cost $2k (and I was thinking a PS4 was much less than that and had more memory)

Preview lighting must be accurate including errors of final render

Questions: (replies annotated with speaker/company where possible)

How much research is reused in Film?

Tabellion: The relevant research is used.

Disney: Other research used, not just rendering i.e. physics

Thiago: Researchers need access to data

Kayvon: Providing content to researchers has come up before. And the access to the environment too – lots of CPUs.

Tabellion: Feels that focus on research may be more towards games at HPG

Need usable licenses and no patents

Lots of work focused on polys and not on curves

Need to consider performance and memory usage of larger solutions

Convergence between films and games

Tabellion: Content production – game optimize for scene, film is many artists in parallel with no optimisation

Rasmus: Both seeing complexity increase

Weta: More tracking than convergence. Games have to meet hard limit of frame time

Discussion of Virtual Production

Real time preview of mocap in scene

With moveable camera tracked in the stage

Separate preview renderer?

Have to maintain 2 renderers

Using same [huge] assets – sometimes not just slow to render but load too

Difficult to match final in real time now moving to GI and ray tracing

Work to optimise management of data

Lots of render nodes want the same data

Disney: Just brute forces it

Weta: Don’t know of scheduler that knows about the data required. Can solve abstractly but not practically. Saw bittorrent-like example.

What about exploiting coherence?

Some renders could take 6-10 hours, but need the result next day so can’t try putting two back-to-back

Do you need all of the data all of the time? Could you tile the work to be done?

Not in Arnold – need all of the data for possible intersections

Needs pipeline integration, render management

Example of non water tight geometry – solving in Arnold posted to JCGT (Robust BVH Ray Traversal)

Missing ray intersection can add minutes of pre processing and gigs of memory

Double precision?

Due to some hacks when using floats, you could have done it just as fast in double instead

Arnold: Referred to JCGT paper

Disney: Don’t have to think when using doubles

Tabellion: Work in camera space or at focal point

Expand bvh by double precision – fail – look up JCGT paper

Saturday July 20

Keynote 1: Michael Shebanow (Samsung): An Evolution of Mobile Graphics Slides

Not a lot to report here and the slides cover a lot of what was said.

Fast Interactive Systems

Moderator: Timo Aila, NVIDIA Research

Lazy Incremental Computation for Efficient Scene Graph Rendering Slides Paper

Michael Wörister, VRVis Research Center; Harald Steinlechner, VRVis Research Center; Stefan Maierhofer, VRVis Research Center; Robert F. Tobler, VRVis Research Center

The problem being tackled was the cost of scenegraph traversal. The aim was to reduce this cost by maintaining an external optimized structure and propagating changes from the scenegraph to it. Most of the content covered the different techniques for minimizing the cost of keeping the two structures synchronized. Overall, the caching did improve performance since it enabled a set of optimizations. The additional memory required was relatively small, but I did note that a 50% increase in startup time was mentioned.

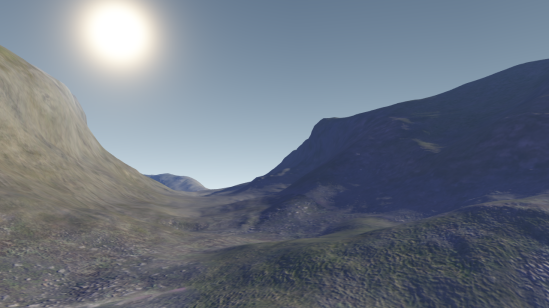

Real-time Local Displacement using Dynamic GPU Memory Management Site

Henry Schäfer, University of Erlangen-Nuremberg; Benjamin Keinert, University of Erlangen-Nuremberg; Marc Stamminger, University of Erlangen-Nuremberg

The examples for this paper were footsteps in terrain, sculpturing and vector displacement. The displacements are stored in a buffer dynamically allocated from a larger memory area and then sampled when rendering. The storage of the displacement is based on an earlier work by the same authors: Multiresolution Attributes for Tessellated Meshes. The memory management part of the work seems quite familiar having seen quite a few presentations on partially resident textures. The major advantage is that the management can take place GPU side, rather than needing a CPU to update memory mapping tables.

Real-Time High-Resolution Sparse Voxelization with Application to Image Based Modeling (similar site)

Charles Loop, Microsoft Research; Cha Zhang, Microsoft Research; Zhengyou Zhang, Microsoft Research

This presentation introduced an MS Research project using multiple cameras to generate a voxel representation of a scene that could be textured. The aim was a possible future use as a visualization of remote scenes for something like teleconferencing. The voxelization is performed on the GPU based on the images from the cameras, and the results appear very plausible with only minor issues in common problem areas such as hair. It looks like fun, going by the videos of the testers using it.

Building Acceleration Structures for Ray Tracing

Moderator: Warren Hunt, Google

Efficient BVH Construction via Approximate Agglomerative Clustering Slides Paper

Yan Gu, Carnegie Mellon University; Yong He, Carnegie Mellon University; Kayvon Fatahalian, Carnegie Mellon University; Guy Blelloch, Carnegie Mellon University

This work extends the agglomerative clustering described in Bruce Walter et al’s 2008 paper Fast Agglomerative Clustering for Rendering to improve performance by exposing additional parallelism. The parallelism comes from partitioning the primitives to allow multiple instances of the agglomeration to run in their own local partition. This provides a greater win at the lower level where most of the time is typically spent. The sizing of the partitions and the number of clusters in each partition lead to parameters that can be tweaked to trade off speed against quality.
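For anyone unfamiliar with agglomerative (bottom-up) BVH building, here is a deliberately naive sketch: repeatedly merge the two clusters whose combined bounding box has the smallest surface area. This is the brute-force baseline rather than the approximate, partitioned method from the talk, and the names and box representation are my own.

```python
def union(a, b):
    """Smallest axis-aligned box containing boxes a and b (each a (min, max) pair)."""
    return (tuple(map(min, a[0], b[0])), tuple(map(max, a[1], b[1])))


def area(box):
    """Surface area of an axis-aligned box."""
    dx, dy, dz = (hi - lo for lo, hi in zip(box[0], box[1]))
    return 2.0 * (dx * dy + dy * dz + dz * dx)


def agglomerate(boxes):
    """Build a tree of nested tuples; leaves are indices into `boxes`."""
    clusters = [(box, i) for i, box in enumerate(boxes)]
    while len(clusters) > 1:
        best = None
        # Exhaustive search for the cheapest pair to merge.
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                cost = area(union(clusters[i][0], clusters[j][0]))
                if best is None or cost < best[0]:
                    best = (cost, i, j)
        _, i, j = best
        merged = (union(clusters[i][0], clusters[j][0]), (clusters[i][1], clusters[j][1]))
        clusters = [c for k, c in enumerate(clusters) if k not in (i, j)] + [merged]
    return clusters[0][1]


boxes = [((0, 0, 0), (1, 1, 1)), ((1, 0, 0), (2, 1, 1)), ((10, 0, 0), (11, 1, 1))]
tree = agglomerate(boxes)
```

The pair search here is quadratic per merge, which is why running it independently inside small spatial partitions is attractive: each partition is cheap, and the partitions can run in parallel.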

Fast Parallel Construction of High-Quality Bounding Volume Hierarchies Slides Page

Tero Karras, NVIDIA; Timo Aila, NVIDIA

This presentation started with the idea of effective performance, based on the number of rays traced per unit of rendering time. Rendering time includes the time to build your bounding volume hierarchy as well as the time to intersect rays with it, so you need to balance the build speed against the quality of the BVH. This work takes the idea of building a fast, low-quality BVH (from the same presenter at last year’s HPG – Maximizing Parallelism in the Construction of BVHs, Octrees, and kd Trees) and then improving the BVH by optimizing treelets, subtrees of internal nodes. Perfect optimization of these treelets is NP-hard in the size of the treelet, so instead they iterate 3 times on treelets with a maximum size of 7 nodes – which still has 10K possible layouts! This gives a good balance between performance and diminishing returns. The presentation also covers a practical implementation of splitting triangles whose bounding boxes are a poor approximation to the underlying triangle.
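Two of the numbers above are easy to reproduce. The sketch below is my own reading of the summary, not code from the paper: it assumes the "7" counts treelet leaves, in which case the number of distinct binary trees over n labelled leaves is the double factorial (2n-3)!!, and it writes effective performance as rays divided by build time plus trace time.

```python
def treelet_topologies(leaves):
    """Number of distinct binary trees over `leaves` labelled leaves: (2n - 3)!!."""
    count = 1
    for k in range(3, 2 * leaves - 2, 2):   # 3 * 5 * ... * (2n - 3)
        count *= k
    return count


def effective_rays_per_second(num_rays, build_seconds, trace_seconds):
    """Rays per unit of total render time, counting the BVH build as render time."""
    return num_rays / (build_seconds + trace_seconds)


layouts_for_7 = treelet_topologies(7)
fast_build = effective_rays_per_second(1_000_000, build_seconds=0.5, trace_seconds=1.5)
slow_build = effective_rays_per_second(1_000_000, build_seconds=2.0, trace_seconds=1.0)
```

With these made-up timings the slower, higher-quality build traces faster but loses overall, which is the trade-off the effective performance metric is meant to expose.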

On Quality Metrics of Bounding Volume Hierarchies Slides Page

Timo Aila, NVIDIA; Tero Karras, NVIDIA; Samuli Laine, NVIDIA

This presentation started with an overview of the Surface Area Heuristic (SAH), which gives great results despite the questionable assumptions on which it rests. To check how well the SAH actually correlates with performance, they tested multiple top-down BVH builders and calculated how the surface area heuristic predicted the ray intersection performance of the BVH from the builder for multiple scenes. A lot of the results correlated well, but the San Miguel and Hairball scenes typically showed a loss of correlation which indicated that maybe SAH doesn’t give a complete picture of performance. Reconsidering the work done in ray tracing, an additional End Point Overlap metric was introduced for handling the points at each end of the ray which appears to improve the correlation. This was then further supplemented with another possible contribution to the cost, leaf variability, which was introduced to account for how the resulting BVH affects SIMD traversal. This paper reminded me of the Power Efficiency for Software Algorithms running on Graphics Processors paper from the previous year, leading us to question the basis for how we evaluate our work.
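For context, the SAH cost of a finished BVH is usually written as a sum over nodes, each weighted by its surface area relative to the root. The sketch below is a generic version of that formula, not the paper's code; the node layout and the unit cost constants are placeholders of my own, and it does not include the end point overlap or leaf variability terms.

```python
def surface_area(box):
    """Surface area of an axis-aligned box given as ((x0, y0, z0), (x1, y1, z1))."""
    (x0, y0, z0), (x1, y1, z1) = box
    dx, dy, dz = x1 - x0, y1 - y0, z1 - z0
    return 2.0 * (dx * dy + dy * dz + dz * dx)


def sah_cost(node, root_area, c_node=1.0, c_tri=1.0):
    """Expected traversal cost of a random ray under the usual SAH assumptions."""
    p_hit = surface_area(node["box"]) / root_area    # chance the ray reaches this node
    if "tris" in node:                               # leaf: pay for every triangle test
        return p_hit * c_tri * node["tris"]
    children = sum(sah_cost(c, root_area, c_node, c_tri) for c in node["children"])
    return p_hit * c_node + children


bvh = {
    "box": ((0, 0, 0), (2, 1, 1)),
    "children": [
        {"box": ((0, 0, 0), (1, 1, 1)), "tris": 2},
        {"box": ((1, 0, 0), (2, 1, 1)), "tris": 3},
    ],
}
cost = sah_cost(bvh, surface_area(bvh["box"]))
```

The formula only looks at box areas and triangle counts, so it cannot distinguish two trees that differ in how rays actually start and end inside them or in how evenly work is spread across SIMD lanes.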

Hot3D

Michael Mantor, Senior Fellow Architect (AMD): The Kabini/Temash APU: bridging the gap between tablets, hybrids and notebooks

Marco Salvi (Intel): Haswell Processor Graphics

John Tynefield & Xun Wang (NVIDIA): GPU Hardware and Remote Interaction in the Cloud

Hot3D is a session that typically gives a lot of low-level details about the latest hardware or tech. AMD started by introducing the Kabini/Temash APU. This was the most technical of the talks, discussing the HD 8000 GPU which features their Graphics Core Next (GCN) architecture and asynchronous compute engines – all seems quite familiar really. Intel were next, discussing Haswell and covering some of the mechanisms used for lowering power usage and allowing better power control, such as moving the voltage regulator off the motherboard. Marco also mentioned the new Pixel Sync features of Haswell, which were covered many times during HPG and SIGGRAPH. NVIDIA were last in this section and presented some of their cloud computing work.

Sunday July 21

Keynote 2: Steve Seitz (U. Washington (and Google)): A Trillion Photos (Slides very similar to EPFL 2011)

Very similar to Alexei’s presentation from EGSR last year (Big Data and the Pursuit of Visual Realism), Steve wowed the audience with the possibilities available when you have the entirety of the images from Flickr available and know the techniques you need to match them up. Scale-invariant feature transform (SIFT) was introduced first. This (apparently patented) technique detects local features in images, then uses a description of each feature to identify similar features in other images. The feature description was explained as a histogram of edges. This was shown applied to images from the NASA Mars Rover to match locations across images. Next Steve introduced Structure from Motion, which allows the reconstruction of an approximate 3D environment based on multiple 2D images. This enabled the Building Rome in a Day project, which reconstructed the landmarks of Rome from the million photos of Rome on Flickr in 24 hours! This was later followed by a Rome on a Cloudless Day project that produced much denser geometry and appearance information. Steve also referenced other work by Yasutaka Furukawa on denser geometry generation, such as Towards Internet-scale Multi-view Stereo, which later led to the tech for GL maps in Google Maps. One of the last examples was a 3D Wikipedia that could cross-reference text with a 3D reconstruction of a scene from photos, where auto-detected keywords could be linked to locations in the scene.

Ray Tracing Hardware and Techniques

Moderator: Philipp Slusallek, Saarland University

SGRT: A Mobile GPU Architecture for Real-Time Ray Tracing Slides Page

Won-Jong Lee, SAMSUNG Advanced Institute of Technology; Youngsam Shin, SAMSUNG Advanced Institute of Technology; Jaedon Lee, SAMSUNG Advanced Institute of Technology; Jin-Woo Kim, Yonsei University; Jae-Ho Nah, University of North Carolina at Chapel Hill; Seokyoon Jung, SAMSUNG Advanced Institute of Technology; Shihwa Lee, SAMSUNG Advanced Institute of Technology; Hyun-Sang Park, National Kongju University; Tack-Don Han, Yonsei University

Similar to last year’s talk, the reasoning behind aiming for mobile real-time ray tracing was better quality for augmented reality, which also reminds me of Jon Olick’s keynote from last year and his AR results. The solution presented was the same hybrid CPU/GPU solution, with updates from the SIGGRAPH Asia presentation Parallel-pipeline-based Traversal Unit for Hardware-accelerated Ray Tracing. That work showed performance improvements with coherent rays by splitting the pipeline into separate parts, such as AABB or leaf tests, so that rays can be iteratively processed in one part without needing to occupy the entire pipeline.

An Energy and Bandwidth Efficient Ray Tracing Architecture Slides Page

Daniel Kopta, University of Utah; Konstantin Shkurko, University of Utah; Josef Spjut, University of Utah; Erik Brunvand, University of Utah; Al Davis, University of Utah

This presentation was based on TRaX (TRaX: A Multi-Threaded Architecture for Real-Time Ray Tracing from 2009) and investigating how to reduce energy usage without reducing performance. Most of the energy usage is in data movement so the main aim is to change the pipeline to use macro instructions which will perform multiple operations without needing to write intermediate operands back to the register file. Also, the new system is treelet based since they can be streamed in and remain in L1 cache. The result was a 38% reduction in power with no major loss of performance.

Efficient Divide-And-Conquer Ray Tracing using Ray Sampling Slides Page

Kosuke Nabata, Wakayama University; Kei Iwasaki, Wakayama University/UEI Research; Yoshinori Dobashi, Hokkaido University/JST CREST; Tomoyuki Nishita, UEI Research/Hiroshima Shudo University

Following last year’s SIGGRAPH Naive Ray Tracing: A Divide-And-Conquer Approach presentation by Benjamin Mora, this research focuses on problems discovered with the initial implementation. These problems stem from inefficiencies when splitting geometry without considering the coherence in the rays, and from low-quality filtering during ray division, which can result in only a few rays being filtered against geometry. The fix is to select some sample rays, generate partitioning candidates to create bins for the triangles, then use the selected samples to calculate inputs for a cost function to minimize. While discussing this cost metric, they mentioned the poor estimates of the SAH metric with non-uniform ray distributions, which seemed timely given Timo’s earlier presentations. The samples can also indicate which child bounding box to traverse first. The results look good, although it appears to work best with incoherent rays, which have a lot of applications in ray tracing after dealing with primary paths.

Megakernels Considered Harmful: Wavefront Path Tracing on GPUs Slides Page

Samuli Laine, NVIDIA; Tero Karras, NVIDIA; Timo Aila, NVIDIA

A megakernel is a ray tracer with all of the code in a single kernel, which is bad for several reasons: instruction cache thrashing, low occupancy due to register consumption, and divergence. In the case of this paper, one of the materials shown is a beautiful 4 layer car paint whose shader was white and green specks of code on a powerpoint slide. A pooling mechanism (maintaining something like a million paths) allows the ray tracer to queue similar work to be batch processed by smaller kernels performing path generation or material intersection, reducing the amount of code and registers required and minimizing divergence. The whole thing sounds very similar to the work queuing performed in hardware by GPUs until there is sufficient work to kick off a wavefront, nicely described by Fabian Giesen in his Graphics Pipeline posts. It would be good to know what the hardware ray tracing guys think of these results, since the separation of the pipeline appears similar to Won-Jong Lee’s parallel pipeline traversal unit.
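The queuing idea can be illustrated with a toy CPU sketch: instead of one big function branching on material per path, paths are bucketed by material and each bucket is handed to a small, specialised function. Everything here (the material names, the throughput factors, the dictionary-based path record) is invented for illustration and is not from the paper.

```python
from collections import defaultdict


def shade_diffuse(path):
    path["throughput"] *= 0.5       # placeholder for a real diffuse BSDF evaluation


def shade_mirror(path):
    path["throughput"] *= 0.9       # placeholder for a real mirror BSDF evaluation


MATERIAL_KERNELS = {"diffuse": shade_diffuse, "mirror": shade_mirror}


def wavefront_step(paths):
    """One iteration: queue paths by material, then run one small 'kernel' per queue."""
    queues = defaultdict(list)
    for path in paths:
        queues[path["material"]].append(path)
    launches = []
    for material, queue in queues.items():
        kernel = MATERIAL_KERNELS[material]
        for path in queue:          # on a GPU this loop would be one coherent launch
            kernel(path)
        launches.append((material, len(queue)))
    return launches


pool = [{"material": m, "throughput": 1.0} for m in ["diffuse", "mirror", "diffuse"]]
launches = wavefront_step(pool)
```

Each launch runs a single material's code over a batch of paths, so neighbouring threads execute the same instructions and each kernel only needs the registers for its own shader.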

Panel: Hardware/API Co-evolution

Moderator: Peter Glaskowsky (replies annotated with speaker/company where possible)

ARM: Tom Olson, Intel: Michael Apodaca, Microsoft: Chas Boyd, NVIDIA: Neil Trevett, Qualcomm: Vineet Goel, Samsung: Michael Shebanow

Introduction – Thoughts on API HW Evolution

AMD: deprecate cost of API features

Tom Olson: Is TBDR dead with tessellation? Is tessellation dead?

Intel: Memory is just memory. Bindless and precompiled states.

Microsoft: API as convergence.

NVIDIA: Power and more feedback to devs

Qualcomm: Showed GPU use cases

Samsung: Reiterated that APIs are power inefficient as mentioned in keynote

Power usage?

AMD: Good practice. Examples of power use.

ARM: We need better IHV tools

Intel, Microsoft, NVIDIA: Agree

NVIDIA: OpenGL 4 efficient hinting is difficult

Qualcomm: Offers tile based hints

Samsung: Need to stop wasting work

Charles Loop: Tessellation not dead. Offers advantages, geometry internal to GPU, don’t worry about small tris and rasterise differently – derivatives

? Possibly poor tooling

? Opensubdiv positive example of work being done

Tom: Not broken but needs tweaking

Expose query objects as first class?

Chas: typically left to 3rd parties

Not really hints but required features

When will we see tessellation in mobile? Eg on 2W rather than 200W

Qualcomm: Mobile content different

Neil: Tessellation can save power

Chas: quality will grow

Tom: Mobile evolving differently due to ratios

Able to get info on what happens below driver?

? Very complex scheduling

What about devs that don’t want to save power?

Tom: It doesn’t matter to $2 devs, but AAA

Chas: Devs will become more sensitive

Ray tracing in hardware? Current API

Chas: Don’t know but could add minor details to gpus

Samsung: RT needs all the geometry

SOC features affect usage?

Qualcomm: Heterogeneous cores to be exposed to developers

Shared/unified memory?

AMD: Easy to use power

Neil: Yes we need more tools

What about lower level access?

…

Best Paper Award

All 3 places went to the NVIDIA raytracing team:

1st: On Quality Metrics of Bounding Volume Hierarchies Timo Aila, Tero Karras, Samuli Laine

2nd: Megakernels Considered Harmful: Wavefront Path Tracing on GPUs Samuli Laine, Tero Karras, Timo Aila

3rd: Fast Parallel Construction of High-Quality Bounding Volume Hierarchies Tero Karras, Timo Aila

Next year

HPG 2014 is currently expected to be in Lyon during the week of 23-27 June. Hope to see you there!